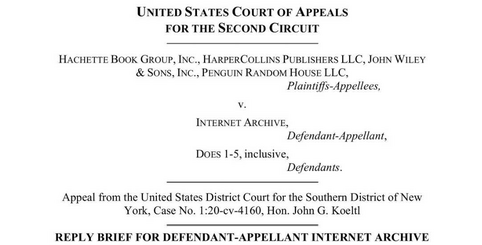

On April 19th, The Internet Archive filed the final brief in their appeal of the "Hachette v. Internet Archive" lawsuit (in which judgment was handed down against The Internet Archive last year).

What is curious is that this final brief fails -- almost completely -- to reasonably address the core issues of the lawsuit. What's more, the public statements that followed from The Internet Archive appeared to be crafted to drum up public sympathy by misrepresenting the core of the case itself.

Which suggests that The Internet Archive is very much aware that they are likely to lose this appeal.

After a careful reading of the existing public documents relating to this case... it truly is difficult to come to any other conclusion.

The Internet Archive does some critically important work by archiving and indexing a wide variety of culturally significant material (from webpages to decades-old magazine articles). In this work, they help to preserve history. An extremely noble, and valuable, endeavor. Which makes the likelihood of this legal defeat all the more unfortunate.

What is "Hachette v. Internet Archive"?

Here's the short-short version of this lawsuit:

The Internet Archive created a program they called "Controlled Digital Lending" (CDL) -- where a physical book is scanned, turned into a digital file, and that digital file is then "loaned" out to people on the Internet. In 2020, The Internet Archive removed what few restrictions existed with this Digital Lending program, allowing an unlimited number of people to download the digital copy of a book.

The result was a group of publishers filing the "Hachette v. Internet Archive" lawsuit. That lawsuit focused on two key complaints:

- The books were "digitized" (converted from physical to digital form) -- and distributed -- without the permission of the copyright holders (publishers, authors, etc.).

- The Internet Archive received monetary donations (and other monetary rewards) as a result of freely distributing said copyrighted material. Again, without permission of the copyright holders. Effectively making the Internet Archive's CDL a commercial enterprise for the distribution of what is best described as "pirated material".

That lawsuit was decided, against The Internet Archive, in 2023 -- with the judge declaring that "no case or legal principle supports" their defense of "Fair Use".

That judgment was appealed by The Internet Archive. Which brings us to today, and their final defense (in theory).

What is the final defense of The Internet Archive?

Let's take a look at the final brief in The Internet Archive's bid to appeal this ruling.

The general defense of The Internet Archive is fairly simple: The Internet Archive's "Controlled Digital Lending" falls under "Fair Use". And, therefore, is legal.

Let's look at two of the key arguments within the brief... and the issues with them.

Not "For Anyone to Read"

"Controlled digital lending is not equivalent to posting an ebook online for anyone to read"

This argument -- part of the brief's Introduction -- is quite a strange defense to make.

The "Controlled Digital Lending" program, starting in March of 2020, literally posted a massive book archive "online for anyone to read". This was branded the "National Emergency Library".

Good intentions aside, the Internet Archive is now attempting to claim that they did not do... the exact thing that they proudly did (they even issued press releases about how they did it).

As such, I don't see a judge being swayed by this (poorly thought out) argument.

"Because of the Huge Investment"

"... because of the huge investment required to operate a legally compliant controlled lending system and the controls defining the practice, finding fair use here would not trigger any of the doomsday consequences for rightsholders that Publishers and their amici claim to fear."

Did you follow that?

The argument here is roughly as follows:

"It costs a lot of money to make, and distribute, digital copies of books without the permission of the copyright holder... therefore it should be legal for The Internet Archive to do it."

An absolutely fascinating defense. "Someone else might not be able to commit this crime, so we should be allowed to do it" is one of the weirdest defenses I have ever heard.

Again, I doubt the judge in this case is likely to be convinced by this logic.

There are many other arguments made within this final brief -- in total, 32 pages' worth of arguments. But none were any more convincing -- from a logical perspective -- than the two presented here. In fact, most of the arguments tended to be entirely unrelated to the core lawsuit and judgment.

The Court of Public Opinion

Let's be honest: The Internet Archive looks destined to lose this court battle. They lost once, and their appeal is, to put it mildly, weak.

Maybe you and I are on the side of The Internet Archive. Maybe we are such big fans of Archive.org that we want to come to their defense.

But feelings don't matter here. Only facts. And the facts are simple. The Archive's actions and statements (and questionable legal defense) have all but ensured a loss in this case.

So... what happens next?

What do you do when you have a profitable enterprise (bringing in between $20 and $30 million per year) that is on the verge of a potentially devastating legal ruling which could put you out of business?

Why, you turn to the court of public opinion, of course!

And you spin. Spin, spin, spin. Spin like the wind!

Here is a statement from Brewster Kahle, founder of The Internet Archive, who is working to frame this as a fight for the rights of libraries:

"Resolving this should be easy—just sell ebooks to libraries so we can own, preserve and lend them to one person at a time. This is a battle for the soul of libraries in the digital age."

A battle for the soul of libraries! Whoa! The soul?!

That's an intense statement -- clearly crafted to elicit an emotional response. To whip people up.

But take another look at the rest of that statement. The Internet Archive founder says that resolving this case "should be easy". And he provides a simple, easy-to-follow solution:

"just sell ebooks to libraries so we can own, preserve and lend them to one person at a time"

Go ahead. Read that again. At first it makes total sense... until you realize that it has almost nothing to do with this specific case.

Let's ignore the "one person at a time" statement, which is a well-established lie (the Internet Archive proudly distributed digital copies of physical books to anyone who wanted them, not "one at a time").

But take a look at this proposed resolution... note that it has very little to do with the actual case. The case is about the digitizing of physical books, and distributing those digital copies without permission of the copyright holder. This proposed resolution is about... selling eBooks to lenders.

Yes. Both have to do with eBooks. And, yes, both have to do with lending eBooks.

But that is where the similarities end. And the differences, in this case, are absolutely critical.

Let's take a look at the actual ruling -- which The Internet Archive is attempting to appeal:

"At bottom, [the Internet Archive’s] fair use defense rests on the notion that lawfully acquiring a copyrighted print book entitles the recipient to make an unauthorized copy and distribute it in place of the print book, so long as it does not simultaneously lend the print book. But no case or legal principle supports that notion. Every authority points the other direction."

The Internet Archive's publicly proposed resolution does not address this ruling at all. Which means that, when talking to the public, The Internet Archive is being dishonest about this case.

But they are using flowery language -- "battle for the soul of libraries" -- so they'll likely manage to convince many people that they're telling the truth and representing the facts of the case fairly and honestly. Even if they are not.

There Are Important Disagreements Here

None of which is to say that the points which The Internet Archive is making... are necessarily wrong.

From the announcement of their appeal, the Archive states the following:

"By restricting libraries’ ability to lend the books they own digitally, the publishers’ license-only business model and litigation strategies perpetuate inequality in access to knowledge."

While this statement is designed to evoke specific feelings and responses -- among specific political demographics (see: "perpetuate inequality") -- there is an underlying set of issues here that are worth thinking about.

- Is it important that libraries be able to lend official digital editions of books?

- Should publishers, authors, and other copyright holders be forced to supply digital versions of their written works to libraries?

- If digital works, borrowed from a library, are then copied and distributed more than the rights allow... who is ultimately responsible for that? The library? The creator of the software system which facilitated the lending? Nobody at all?

- Should Libraries or Publishers be able to censor or modify digital works... or should a published digital work be maintained as it is at time of publication? (This issue comes up a lot when talking about censorship and revisions of works.)

These are legitimate questions. And, while the answers may appear obvious, there truly are distinct disagreements among publishers, authors, and libraries.

Some of these issues are raised by The Internet Archive, BattleForLibraries.com, and others.

But none of these questions -- not one -- is part of the ruling in "Hachette v. Internet Archive".

The question that has been answered in this case is simply:

- If you buy physical media (such as a book), can that media be digitized and distributed on the Internet (without authorization or notification of the copyright owner)?

And the answer is, thus far, a resounding... "No".

The Can of Worms

What happens if the judge chooses to uphold the existing judgment against The Internet Archive?

A number of things seem possible (with some seeming like a downright certainty).

- Publishers, authors, and copyright holders of works distributed by The Internet Archive may choose to seek damages. Which could put The Internet Archive in a precarious financial position (to say the least).

- The Internet Archive may be forced to remove other content of questionable copyright. Including software, video, and audio archives.

- Other archival projects may now come under increased scrutiny... thus making it riskier to archive and distribute various types of material.

- And, of course, The Internet Archive could attempt to appeal the case ever higher. Which may be tricky.

Then again... The Internet Archive could win this appeal.

Unlikely. But, hey, weirder things have happened.